Composable Hardware on Chameleon NOW!

Introducing new GigaIO nodes with A100 GPUs

Aug. 19, 2024 by Mike Sherman

Composable hardware in Chameleon!

We're excited to announce the availability of GigaIO's composable hardware at both CHI@UC and CHI@TACC.

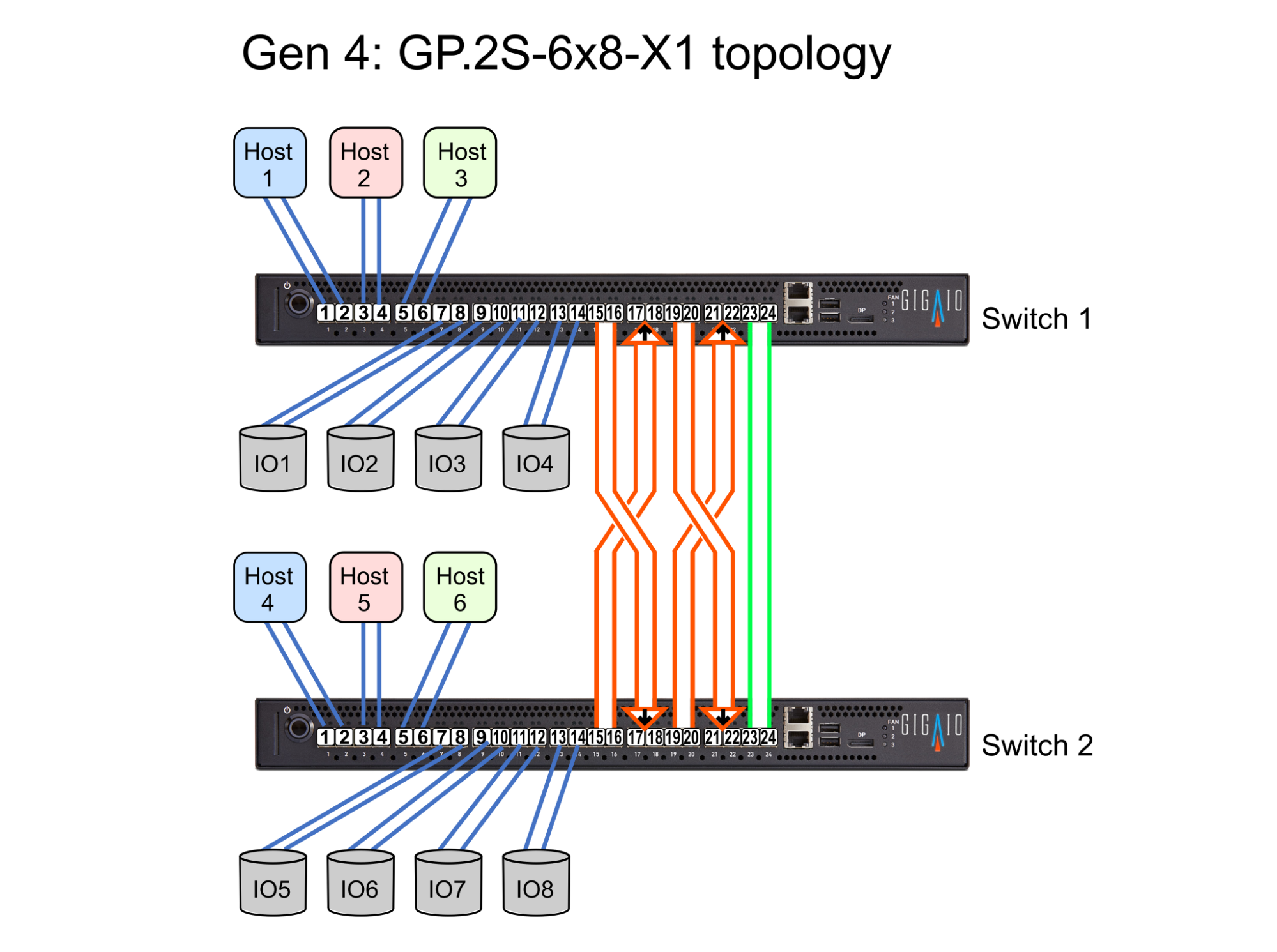

These systems consist of a set of bare metal nodes, accelerators, and a configurable PCIe backplane to connect them. GigaIO's own overview can be found here.

Composable hardware makes node configuration more flexible. For example, the system at CHI@UC currently consists of 8 nodes and 8 A100 GPUs. By default, each node is connected to 1 GPU, but all 8 GPUs can be connected to a single node, or any combination in between. This means we can now support configurations that would previously have been too expensive (such as scaling to 8 GPUs on one node), and we can also improve utilization of the available hardware (an experiment using 2 GPUs and another using 6 GPUs can run at the same time).

What you can do with it!

The backplane is technically a fabric of interconnected PCIe switches, but it can be thought of as one larger "virtual switch" that allows any accelerator to be attached to any node, where it appears to the OS as if it were directly connected.
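To make the "virtual switch" idea concrete, here is a minimal Python sketch that models a composition as a mapping from node names to the GPU indices attached to them. The node names, the `validate_composition` helper, and the 0-7 GPU numbering are hypothetical and only illustrate the constraint described above: any GPU can go to any node, but each GPU is attached to at most one node at a time. This is not a Chameleon or GigaIO API.

```python
NUM_GPUS = 8  # size of the GPU pool at CHI@UC (8 A100s)


def validate_composition(assignment: dict[str, list[int]],
                         num_gpus: int = NUM_GPUS) -> dict[str, int]:
    """Check that each GPU exists and is attached to at most one node.

    Returns the number of GPUs attached to each node.
    """
    seen: set[int] = set()
    for node, gpus in assignment.items():
        for gpu in gpus:
            if not 0 <= gpu < num_gpus:
                raise ValueError(f"{node}: GPU {gpu} is not in the pool")
            if gpu in seen:
                raise ValueError(f"GPU {gpu} is attached to more than one node")
            seen.add(gpu)
    return {node: len(gpus) for node, gpus in assignment.items()}


# Default composition (hypothetical names): node i is connected to GPU i.
default = {f"node-{i}": [i] for i in range(NUM_GPUS)}

# Two experiments sharing the pool at the same time: one with 2 GPUs, one with 6.
shared = {"node-0": [0, 1], "node-2": [2, 3, 4, 5, 6, 7]}

print(validate_composition(default))
print(validate_composition(shared))
```

Any split of the 8 GPUs across nodes passes, while attaching the same GPU to two nodes raises an error, mirroring the fact that the fabric gives each device a single path to one host.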

Reconfiguration involves more than assigning "GPU 1 to Node 4": a path must also be provisioned through the switch fabric. Because of this complexity, we do not offer a reconfiguration API directly. Instead, each node's default configuration is listed in the reference API and is available for reservation. If you would like 4 GPUs attached to 1 node, you'll need to reserve 4 nodes, since each node "comes with" one GPU. After making your reservation, please submit a helpdesk ticket, and we will trigger the re-composition of the resources when your reservation starts.
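The reservation rule above reduces to simple arithmetic. The sketch below is a hypothetical helper (not part of any Chameleon tool) that computes how many default node-plus-GPU units to reserve for a desired composition, given as a list of GPU counts per host. It assumes that every host in the composition must itself be a reserved node and that every GPU must come from some reserved node, so the answer is the larger of the host count and the total GPU count; treating a host that wants 0 GPUs as donating its GPU to another host is also an assumption.

```python
def nodes_to_reserve(gpus_per_host: list[int], pool_size: int = 8) -> int:
    """Return how many default (1 node + 1 GPU) units to reserve.

    Every host in the composition is a reserved node, and every GPU must come
    from some reserved node, so at least max(hosts, total GPUs) nodes are needed.
    pool_size defaults to 8, the number of GigaIO nodes at CHI@UC.
    """
    if not gpus_per_host or any(g < 0 for g in gpus_per_host):
        raise ValueError("need at least one host and non-negative GPU counts")
    needed = max(len(gpus_per_host), sum(gpus_per_host))
    if needed > pool_size:
        raise ValueError(f"composition needs {needed} nodes; only {pool_size} exist")
    return needed


print(nodes_to_reserve([4]))     # 4 GPUs on one host
print(nodes_to_reserve([2, 6]))  # two experiments sharing the whole pool
```

For 4 GPUs on a single host this gives 4 nodes, matching the example in the text; the reservation still needs a helpdesk ticket to trigger the actual re-composition.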

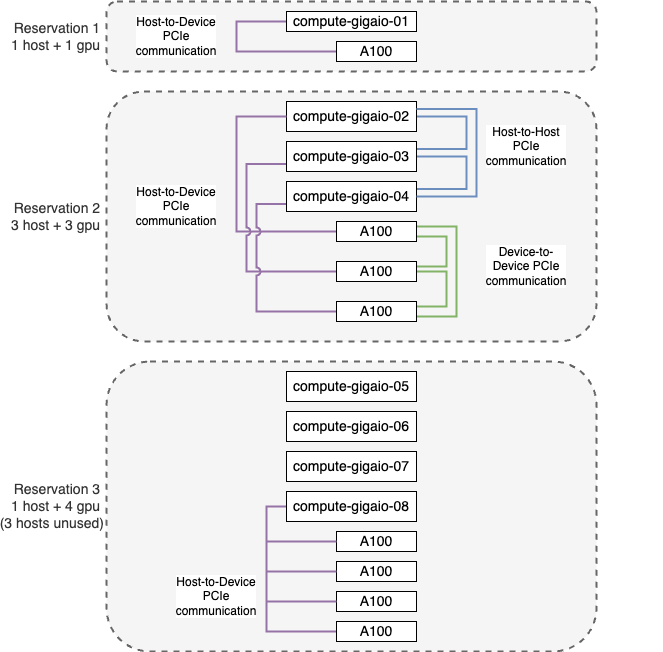

The diagram shows some example configurations and the corresponding reservations.

Note: The 2x 25G Ethernet connections are not shown.

Compared to the existing LIQID deployment at TACC, the GigaIO systems support host-host and device-device communication, not just host-device. (Host-host mode is in beta and requires specific kernel support.)

- In Device-Device mode, technologies such as Nvidia's GPUDirect peer-to-peer mode can operate, enabling memory access, transfers, and synchronization between GPUs without going through the host's CPU or memory controller. For more details, see the GigaIO/Nvidia whitepaper on this mode.

- In Host-Host mode, the PCIe connection can be used as a lossless data transport, similar to InfiniBand, so that MPI jobs, for example, can take advantage of its high bandwidth and low latency. This mode also allows DMA between servers, both for memory pooling and for access to I/O and peripherals.

Hardware in CHI@UC:

Servers

8 Dell R6525 servers, with node_type "compute_gigaio"

- 2x AMD EPYC 7763 64-Core Processors, for a total of 256 threads

- 512 GB of RAM

- 2x 480GB SATA SSDs

- 2x 25G NICs

- 8x lanes of PCIe 4.0 to the backplane: 128 Gb/s per direction, 256 Gb/s bidirectional

Accelerators

8 Nvidia A100 PCIe GPUs, with:

- 80 GB RAM

- 8x lanes of PCIe 4.0 to the backplane: 128 Gb/s per direction, 256 Gb/s bidirectional
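The backplane figures follow from the PCIe 4.0 signalling rate of 16 GT/s per lane: 8 lanes give 128 Gb/s in each direction, and because PCIe links are full duplex, 256 Gb/s counting both directions. The short Python sketch below reproduces that arithmetic and also applies PCIe 4.0's 128b/130b line encoding to estimate usable bandwidth. It deliberately ignores packet and protocol overhead, so real transfer rates will be somewhat lower; the x16 row corresponds to the LIQID nodes' link width.

```python
PCIE4_GT_PER_LANE = 16.0     # PCIe 4.0: 16 GT/s per lane, one bit per transfer
ENCODING_EFFICIENCY = 128 / 130  # PCIe 3.0 and later use 128b/130b encoding


def raw_gbps_per_direction(lanes: int) -> float:
    """Raw signalling rate in one direction, in Gb/s."""
    return lanes * PCIE4_GT_PER_LANE


def raw_gbps_bidirectional(lanes: int) -> float:
    """Sum of both directions; PCIe links transmit and receive simultaneously."""
    return 2 * raw_gbps_per_direction(lanes)


def effective_gbytes_per_direction(lanes: int) -> float:
    """Rate after 128b/130b encoding, in GB/s (protocol overhead ignored)."""
    return raw_gbps_per_direction(lanes) * ENCODING_EFFICIENCY / 8


for name, lanes in [("GigaIO x8", 8), ("LIQID x16", 16)]:
    print(f"{name}: {raw_gbps_per_direction(lanes):.0f} Gb/s per direction, "
          f"{raw_gbps_bidirectional(lanes):.0f} Gb/s both directions, "
          f"~{effective_gbytes_per_direction(lanes):.2f} GB/s usable per direction")
```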

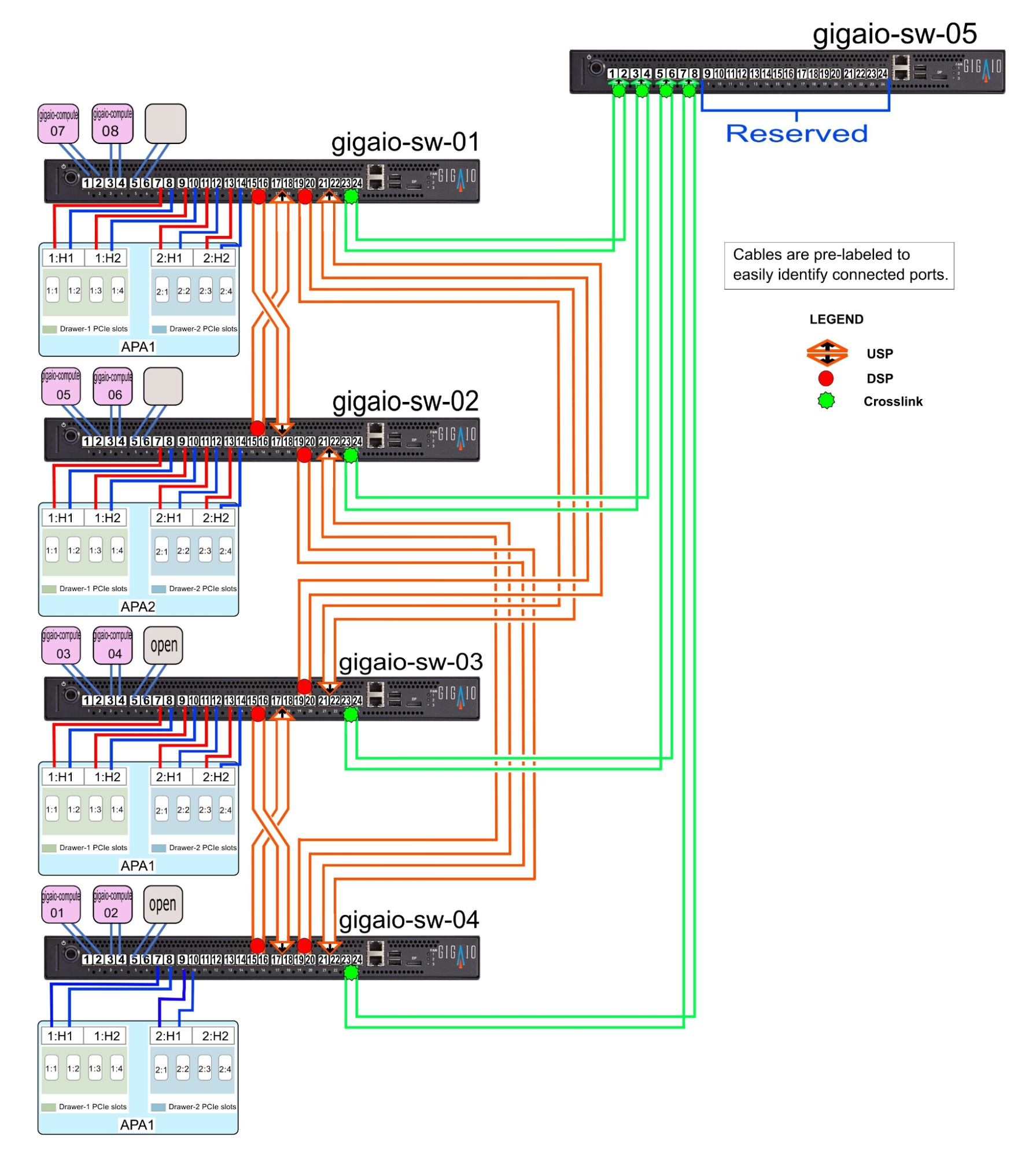

Connectivity

Hardware in CHI@TACC:

GigaIO:

6 PowerEdge R650 nodes, of node type "compute_gigaio"

- Intel Xeon Platinum 8380

- 256 GB RAM

- 1x 40 GbE NIC

- 1x 480 GB SATA SSD

- 8x lanes of PCIe 4.0 to the backplane: 128 Gb/s per direction, 256 Gb/s bidirectional

4 Nvidia A100-class PCIe GPUs

- 2x GA100 [A100 PCIe 40GB]

- 2x GA100GL [A30 PCIe]

LIQID:

8 PowerEdge R6525 nodes, of node type "compute_liqid"

- AMD EPYC 7763

- 256 GB RAM

- 1x 480 GB SATA SSD

- 16x lanes of PCIe 4.0 to the backplane

10x GA100 [A100 PCIe 40GB]

16x Samsung NVMe "Honeybadger" 3839 GB

Chameleon Testbed Secures $12 Million in Funding for Phase 4

Expanding Frontiers in Computer Science Research

Aug. 1, 2024

We are thrilled to announce that Chameleon, our experimental testbed for computer science research, has been awarded $12 million in funding from the U.S. National Science Foundation (NSF) for its fourth phase. Four more years of Chameleon!

Chameleon Changelog for August 2022

Sept. 1, 2022 by Mark Powers

We hope everybody has had a great vacation – welcome back, we are glad to see you again! In this month’s changelog, we bring you groundbreaking composable Liqid hardware at CHI@TACC, updated categorization for projects, appliance news, and the Xena upgrade at CHI@NU. Additionally, we have a reminder about a scheduled outage of our authentication service, and important notes on using our A100 hardware.
