Announcing FAST 2026 Birds-of-a-Feather (BoF) Session on Reproducibility

Our Reproducibility Ambassadors Are Heading to FAST '26 — Here's What to Expect

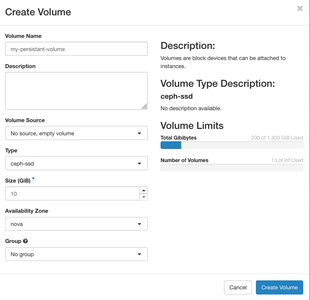

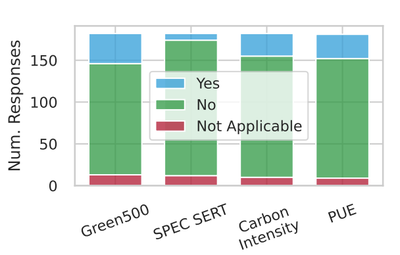

Two PhD students, selected through the Reproducibility Ambassador program funded by the NSF REPETO project, are heading to FAST '26 to share how researchers can package and reproduce their experiments using Chameleon Trovi. Join them on February 24th and 25th to see it in action.